Technical

Written by Janka Bergner

15 July 2025

Whilst the rapidly evolving world of Generative AI offers a wealth of opportunities, keeping up with new information can be overwhelming. This article gives an overview of what we've learnt about the different tools, along with some guidance on how they can be used to speed up integration with our Access APIs.

Our experience to date with the most popular Large Language Models (LLMs) is that input determines output: the key to obtaining the best result is high-quality input. This can range from good context and guided flows to good supporting tools. When a model relies only on its training data and web searches, results are often good but not great. For example, a request to integrate with an API to take eCommerce payments requires knowledge of the latest API specifications and of which APIs to use given the user's requirements. These can include complexities such as tokenization, repeat payments, authentication, locale or tax requirements, to name a few. Without that knowledge, the result can be a close but incorrect implementation that takes days, if not weeks, to debug.

Luckily, the industry is working to address these complexities by using the Model Context Protocol (MCP) to guide LLMs. MCP is an open standard and open-source framework, launched by Anthropic in November 2024, designed to standardize how AI systems such as LLMs connect to and share data with external tools, systems, and data sources.

As part of our innovation week, we formed a small group of three of our engineers, Sean Flynn, Olivier Chalet and Alejandro Baeza Gutierrez, and our architect, Simon Farrow. Our goal was to build a full end-to-end integration using Gen AI, create an MCP server, and publish our findings for everyone to use and build on. We started with one of our most common use cases, "Taking, Querying and Managing a Payment", and explored which tools could support us on our journey. Here is what we found:

Before using our tools, make sure you have your Access credentials so you can connect to our APIs. Contact your Implementation Manager for help with this.
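Once you have your credentials, it is good practice to keep them out of source code. The sketch below reads them from environment variables and builds an HTTP Basic `Authorization` header. It assumes your Access credentials are a username and password used with Basic authentication (confirm this against our documentation), and the variable names `ACCESS_USERNAME` and `ACCESS_PASSWORD` are hypothetical.

```typescript
// Sketch: load Access credentials from the environment instead of hard-coding
// them. ACCESS_USERNAME / ACCESS_PASSWORD are hypothetical variable names, and
// Basic authentication is an assumption to confirm against our docs.

interface AccessCredentials {
  username: string;
  password: string;
}

// Read both values from an environment-like map; fail fast if either is missing.
function loadCredentials(env: Record<string, string | undefined>): AccessCredentials {
  const username = env["ACCESS_USERNAME"];
  const password = env["ACCESS_PASSWORD"];
  if (!username || !password) {
    throw new Error("ACCESS_USERNAME and ACCESS_PASSWORD must both be set");
  }
  return { username, password };
}

// Build "Basic base64(username:password)". The pair is UTF-8 encoded first so
// that non-ASCII characters do not make btoa() throw.
function basicAuthHeader(creds: AccessCredentials): string {
  const bytes = new TextEncoder().encode(`${creds.username}:${creds.password}`);
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  return `Basic ${btoa(binary)}`;
}
```

In Node.js you would call `loadCredentials(process.env)` once at start-up, so a missing variable surfaces immediately rather than as a failed API call later.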

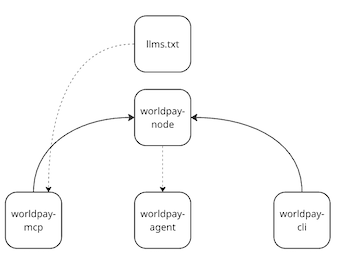

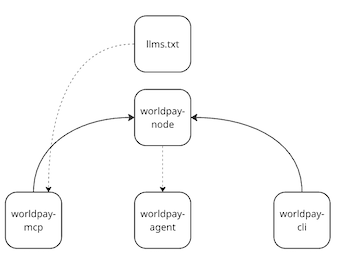

We have structured our tooling as follows. You can use as little or as much of it as you need, as the tools build on one another:

- SDK - our base SDK, written in Node.js, which provides the foundational functions

- LLMs.txt - an LLM-friendly version of our documentation

- MCP Server - a server you can hook up to your favorite AI to help you write code against our APIs or perform actions on your behalf. It uses the SDK functions and the LLMs.txt context to guide your AI.

- CLI - exposes the SDK functions as command-line actions, for example to query your payments or generate a webhook test

SDK purpose: If you are building a Node.js back end to take payments, you can use our SDK to integrate easily. For example, the Node.js SDK provides functions such as takePayment and queryPayment, which are also used by the MCP server and CLI.

- Visit our GitHub repository and clone it, or

- install it from npm: `npm install @worldpay/node`
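The layering described above, where one set of SDK functions is reused by both the MCP server and the CLI, can be sketched as follows. The names takePayment and queryPayment come from this article, but their signatures, the `Payment` shape and the in-memory store are assumptions made so the example runs offline; this is not the real @worldpay/node API.

```typescript
// Sketch of one base SDK layer reused by two front ends. All signatures and
// types are hypothetical; the Map stands in for the Access APIs.

type PaymentStatus = "authorized" | "refused";

interface Payment {
  id: string;
  amount: number; // minor units, e.g. 1000 = 10.00
  currency: string;
  status: PaymentStatus;
}

const store = new Map<string, Payment>();
let nextId = 1;

// Base layer (hypothetical SDK functions).
async function takePayment(amount: number, currency: string): Promise<Payment> {
  const payment: Payment = {
    id: `pay_${nextId++}`,
    amount,
    currency,
    // Fake rule for the offline stand-in: positive amounts are authorized.
    status: amount > 0 ? "authorized" : "refused",
  };
  store.set(payment.id, payment);
  return payment;
}

async function queryPayment(id: string): Promise<Payment | undefined> {
  return store.get(id);
}

// MCP layer (hypothetical tool handler): wraps the SDK result as MCP-style text content.
async function queryPaymentTool(args: { id: string }) {
  const payment = await queryPayment(args.id);
  return {
    content: [{ type: "text" as const, text: payment ? JSON.stringify(payment) : "not found" }],
    isError: payment === undefined,
  };
}

// CLI layer (hypothetical action): formats the same SDK result for a terminal.
async function queryPaymentCommand(id: string): Promise<string> {
  const payment = await queryPayment(id);
  return payment
    ? `${payment.id}  ${payment.status}  ${payment.amount} ${payment.currency}`
    : `No payment ${id}`;
}
```

Keeping business logic in the base layer means the MCP and CLI front ends stay thin: they only translate inputs and format outputs, so a fix in the SDK reaches both.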

LLMs.txt purpose: This is essentially a Markdown version of our content with unnecessary information stripped out. Feeding information to the LLM client in this more structured way produces more accurate results.

LLMs.txt is enabled in our documentation for all existing products. We have seen good results when using llms.txt to provide context to the LLM client, but we will continue to optimize our content for machine use, for example by providing complete request examples and ensuring the header structure is respected.

See /products/ai/llms/index.md to retrieve our content in markdown.
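Rather than passing every page to the model, a client can pick only the pages relevant to a task. The sketch below assumes the index follows the llms.txt convention of `## Section` headings followed by `- [Title](url): notes` list items; the sample URLs in any usage are placeholders, not our real documentation paths.

```typescript
// Sketch: select relevant pages from an llms.txt-style Markdown index so only
// those pages are sent to the LLM as context. Assumes "## Section" headings
// followed by "- [Title](url): optional notes" list items.

interface DocLink {
  section: string;
  title: string;
  url: string;
  notes: string;
}

// Matches "- [Title](url)" with an optional ": notes" suffix.
const LINK_ITEM = /^\s*[-*]\s+\[([^\]]+)\]\(([^)\s]+)\)(?::\s*(.*))?$/;

function parseLlmsIndex(markdown: string): DocLink[] {
  const links: DocLink[] = [];
  let section = "";
  for (const line of markdown.split(/\r?\n/)) {
    if (line.startsWith("## ")) {
      section = line.slice(3).trim();
      continue;
    }
    const m = LINK_ITEM.exec(line);
    if (m) {
      links.push({ section, title: m[1], url: m[2], notes: m[3] ?? "" });
    }
  }
  return links;
}

// Keep links whose section, title or notes mention every keyword (case-insensitive).
function selectLinks(links: DocLink[], keywords: string[]): DocLink[] {
  return links.filter((link) => {
    const haystack = `${link.section} ${link.title} ${link.notes}`.toLowerCase();
    return keywords.every((k) => haystack.includes(k.toLowerCase()));
  });
}
```

In practice you would fetch the index from our documentation site (for example with Node's built-in `fetch`), pass the text to `parseLlmsIndex`, and then load only the selected pages into the model's context.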

MCP server purpose: An MCP (Model Context Protocol) server acts as a bridge between AI applications and external data sources, tools, and services. In short, MCP is a protocol specification that standardizes how an LLM accesses a wide range of specialist tools, and an MCP server is what exposes those tools to it.

MCP aims to make integration more intuitive by letting you use natural language rather than code. We have therefore built an MCP server to help you integrate with and use our suite of APIs, from creating user interfaces on web or mobile and capturing payment details securely to making payments. We will keep adding use cases on an ongoing basis.
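To make the bridge idea concrete, here is a minimal sketch of the JSON-RPC 2.0 messages an MCP client exchanges with a server when the LLM decides to call a tool, using the `tools/call` method from the MCP specification. The tool name `query_payment` and its `paymentId` argument are hypothetical; check the Worldpay MCP section for the tools our server actually exposes.

```typescript
// Sketch of an MCP "tools/call" exchange over JSON-RPC 2.0. The tool name and
// arguments used with it are hypothetical examples.

interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

interface ToolCallResponse {
  jsonrpc: "2.0";
  id: number;
  // Content items may be text or other types (such as images); only text is read here.
  result?: { content: Array<{ type: string; text?: string }>; isError?: boolean };
  error?: { code: number; message: string };
}

function buildToolCall(id: number, name: string, args: Record<string, unknown>): ToolCallRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

// Join the text parts of a tool result, surfacing protocol errors and tool errors.
function readToolResult(response: ToolCallResponse): string {
  if (response.error) {
    throw new Error(`JSON-RPC error ${response.error.code}: ${response.error.message}`);
  }
  const result = response.result;
  if (!result) {
    throw new Error("Response has neither result nor error");
  }
  const text = result.content
    .filter((c) => c.type === "text")
    .map((c) => c.text ?? "")
    .join("\n");
  if (result.isError) {
    throw new Error(`Tool reported an error: ${text}`);
  }
  return text;
}
```

The distinction between `error` (the request itself failed, such as an unknown tool) and `isError` (the tool ran but reported a failure) matters because only the latter is normally shown back to the LLM so it can adjust its next step.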

For instructions and the latest supported tools go to our Worldpay MCP section.

While learning to use MCP servers, we have found that some tools run them more smoothly than others. The following tools worked well for us at the time of publication. This list is not exhaustive, and there are many other tools you can use.

- Claude Desktop with Sonnet 4 - was voted favorite for overall support

- Continue.dev and Cursor.ai - were close followers

- Ollama - worked great for running local models
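Clients such as Claude Desktop connect to an MCP server through a configuration file: Claude Desktop reads an `mcpServers` map from `claude_desktop_config.json`, where each entry gives the command that starts a server. The sketch below merges a new entry into an existing config without dropping servers already listed. The server name `worldpay`, the package placeholder and any environment variables are hypothetical; use the values given in our Worldpay MCP section.

```typescript
// Sketch: add an MCP server entry to a Claude Desktop config object without
// overwriting other servers. Names and package values are placeholders.

interface McpServerEntry {
  command: string;
  args?: string[];
  env?: Record<string, string>;
}

interface ClaudeDesktopConfig {
  mcpServers?: Record<string, McpServerEntry>;
  [key: string]: unknown; // preserve any other top-level settings
}

// Return a new config with `name` added or replaced; the input is not mutated.
function withMcpServer(
  config: ClaudeDesktopConfig,
  name: string,
  entry: McpServerEntry,
): ClaudeDesktopConfig {
  return { ...config, mcpServers: { ...(config.mcpServers ?? {}), [name]: entry } };
}
```

You would then serialize the result with `JSON.stringify(config, null, 2)`, save it as the client's config file, and restart the client so it picks up the new server.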

You may also find this list of applications that support MCP integrations useful, as well as the MCP TypeScript SDK repo.

CLI purpose: A command-line interface (CLI) provides a text-based way to interact with a computer program or operating system instead of a graphical interface. You issue commands by typing them into a terminal or console, and the system then executes them.

A CLI enhances AI usage by enabling task automation, efficient resource management, and faster execution than graphical interfaces allow.

Users can script and automate AI workflows, manage servers from anywhere, quickly test and update models or contexts, access logs for troubleshooting, and share reproducible scripts, all of which streamline AI operations with MCP servers.

Our CLI is available on npm as `@worldpay/cli`. Run it with `npx @worldpay/cli`, or install it globally with `npm i -g @worldpay/cli`.
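Presenting SDK functions as CLI actions usually comes down to parsing the arguments, looking up a handler, and printing its result. The sketch below shows that pattern only; the command name `query-payments`, the `--since` flag and the `queryPayments` stub are hypothetical and are not the interface of our published CLI.

```typescript
// Sketch: map CLI commands onto SDK-style functions. Command names, flags and
// the queryPayments() stub are hypothetical.

type Flags = Record<string, string>;
type Handler = (flags: Flags) => Promise<string>;

// Hypothetical stand-in for an SDK call.
async function queryPayments(since: string): Promise<string[]> {
  return [`pay_1 (since ${since})`];
}

const commands: Record<string, Handler> = {
  "query-payments": async (flags) => (await queryPayments(flags["since"] ?? "today")).join("\n"),
};

// Minimal "--name value" parser; a flag with no value is recorded as "true".
function parseFlags(argv: string[]): Flags {
  const flags: Flags = {};
  for (let i = 0; i < argv.length; i++) {
    const arg = argv[i];
    if (!arg.startsWith("--")) continue;
    const value = argv[i + 1];
    if (value === undefined || value.startsWith("--")) {
      flags[arg.slice(2)] = "true";
    } else {
      flags[arg.slice(2)] = value;
      i++; // skip the consumed value
    }
  }
  return flags;
}

// Dispatch the first argument to its handler, or list the available commands.
async function run(argv: string[]): Promise<string> {
  const [command, ...rest] = argv;
  const handler = command === undefined ? undefined : commands[command];
  if (!handler) {
    return `Unknown command. Available: ${Object.keys(commands).join(", ")}`;
  }
  return handler(parseFlags(rest));
}
```

A script would call `run(process.argv.slice(2)).then(console.log)`. A production CLI would more likely use a parsing library or Node's built-in `util.parseArgs`, but the dispatch table is what keeps each command a thin wrapper over the SDK, which is also what makes such commands easy to script.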

As we learn, we're adding more use cases for you. You can see the list of currently supported tools in our MCP section.

Our plan is to bring you along on our discovery journey by building out more use cases, finding more tools and sharing insights through our articles. So watch this space.